Rapidly Deploying a k8s Business System with Ansible

[TOC]

1. Solution Planning

1.1. Constraints

- If kubectl or kubeadm is installed from binaries, the Linux kernel must be upgraded to 4.18 or later

- Mount the second (data) disk at /data on every node

- The harbor and rancher nodes need Docker; be sure to move Docker's default data directory to the largest partition
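Docker's data directory is set by the data-root key in /etc/docker/daemon.json. A minimal sketch, assuming /data/docker (on the data disk mounted at /data) as the target and that daemon.json does not exist yet; the hypothetical helper refuses to overwrite an existing one. Run it before Docker stores anything, because data already under /var/lib/docker is not migrated:

```shell
# Hypothetical helper: point Docker's data-root at a directory on the largest partition.
# Assumes /data/docker as the target and that daemon.json does not exist yet.
set_docker_data_root() {
  local data_root=${1:-/data/docker}
  local conf=${2:-/etc/docker/daemon.json}
  if [ -e "$conf" ]; then
    echo "refusing to overwrite existing $conf; add data-root to it by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$conf")" "$data_root"
  # Writes: { "data-root": "<dir>" }
  printf '{\n  "data-root": "%s"\n}\n' "$data_root" > "$conf"
}
```

Afterwards restart Docker (systemctl restart docker) and confirm the new location with docker info --format '{{.DockerRootDir}}'.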

1.2. Resource Planning ✔

- Because the harbor node has the most disk space, the nfs-server service is co-located with harbor

| 节点ip |

角色 |

操作系统 |

最低配置 |

| 10.30.3.201 |

harbor,ntp,nfs |

银河麒麟v10sp3 |

4C-16G-40G+400G |

| 10.30.3.202 |

rancher |

银河麒麟v10sp3 |

4C-16G-40G+100G |

| 10.30.3.203 |

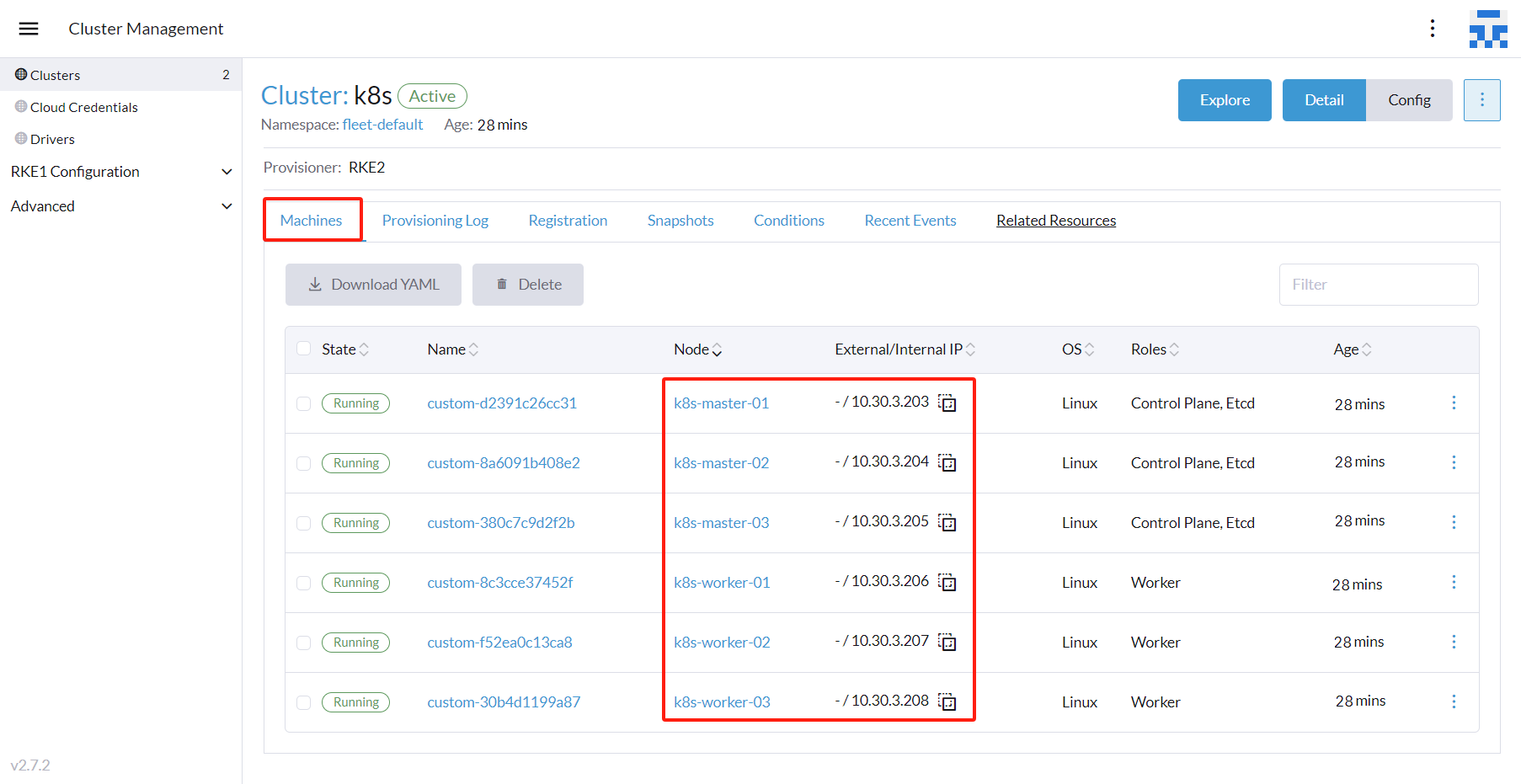

k8s-master-01 |

银河麒麟v10sp3 |

8C-16G-40G+100G |

| 10.30.3.204 |

k8s-master-02 |

银河麒麟v10sp3 |

8C-16G-40G+100G |

| 10.30.3.205 |

k8s-master-03 |

银河麒麟v10sp3 |

8C-16G-40G+100G |

| 10.30.3.206 |

k8s-worker-01 |

银河麒麟v10sp3 |

4C-16G-40G+200G |

| 10.30.3.207 |

k8s-worker-02 |

银河麒麟v10sp3 |

4C-16G-40G+200G |

| 10.30.3.208 |

k8s-worker-03 |

银河麒麟v10sp3 |

4C-16G-40G+200G |

1.3. Software Inventory

| Software | Business A | Business B | Business C | Common |
| --- | --- | --- | --- | --- |
| docker | - | - | - | 24.0.1 |
| docker-compose | - | - | - | 2.18.1 |
| harbor | - | - | - | 2.3.4 |
| rancher | - | - | - | 2.7.2 |
| Kubernetes | - | - | - | 1.18.20 |
| mysql | 8.0.22 | 8+ | - | - |
| mongodb | 6.0.5 | 6+ | - | - |
| redis | 6.0.8 | 6+ | - | - |
| minio | 2023-06-29 | - | - | - |
| nacos | 2.x | 2.1 | - | - |
| dolphinscheduler | 3.1.1 | - | - | - |
| apisix | 3.6 | - | - | - |
| rocketmq | 4.8 | - | - | - |
| seata | 1.4.2 | 1.4.2 | - | - |
| flink | 1.15 | - | - | - |
| kafka | 3.4.0 | - | - | - |
| flowable | - | - | - | 6.4.2 |

2. Preparation

2.1. Management node - Create a CentOS container as the ansible control machine ✔

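- 1. To keep the target environment clean, pick one machine, install Docker on it, and create a container to act as the ansible control machine, for example on 10.30.3.200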

ssh -p22 root@10.30.3.200

- 2. On that machine, copy xbank.iso to /opt and mount /opt/xbank.iso at /xbank (the deployment scripts hard-code this path, so changing it is not recommended)

curl -L http://10.30.4.44/fusion/k8s/xbank.iso -o /opt/xbank.iso

mkdir -p /xbank

mount -o noatime /opt/xbank.iso /xbank

cd /xbank/basic/docker/

sh docker-offline-setup.sh

docker --version

cd /xbank/basic/ansible/

docker load -i centos7.2024.tar

docker run -it -d -p 23245:23245 --name centos7 centos7:7 /bin/bash

docker ps -a | grep centos

- 5. On that machine, copy the /xbank directory into the container (mounting the ISO from inside the container fails)

docker cp /xbank centos7:/

- Note: in all following steps, "log in to the ansible control machine" means entering this centos7 container. Test it:

docker exec -it centos7 /bin/bash

ip a

netstat -lnpt

2.2. Management node - Set up ansible ✔

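- 1. Log in to the ansible control machine and generate the SSH key pair for passwordless login with ssh-keygen -t rsa (the command below only generates one if no key exists yet)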

cat ~/.ssh/id_rsa.pub | grep ssh-rsa || ssh-keygen -t rsa -P "" -f ~/.ssh/id_rsa

- 2. On the ansible control machine, distribute the public key to every managed node to enable passwordless login

ssh-copy-id -p 22 root@10.30.3.201

ssh-copy-id -p 22 root@10.30.3.202

ssh-copy-id -p 22 root@10.30.3.203

ssh-copy-id -p 22 root@10.30.3.204

ssh-copy-id -p 22 root@10.30.3.205

ssh-copy-id -p 22 root@10.30.3.206

ssh-copy-id -p 22 root@10.30.3.207

ssh-copy-id -p 22 root@10.30.3.208
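The eight ssh-copy-id calls above can also be written as a loop. A sketch with a hypothetical helper name; the node list mirrors the resource plan and DRY_RUN is an illustrative switch for reviewing the commands before running them:

```shell
# Nodes from the resource plan; adjust to your environment.
NODES="10.30.3.201 10.30.3.202 10.30.3.203 10.30.3.204 10.30.3.205 10.30.3.206 10.30.3.207 10.30.3.208"

# Copy the control machine's public key to every node passed as an argument.
# With DRY_RUN=1 the commands are only printed.
distribute_keys() {
  local ip
  for ip in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh-copy-id -p 22 root@${ip}"
    else
      # Asks for each node's root password once; keeps going if one node fails.
      ssh-copy-id -p 22 "root@${ip}" || echo "ssh-copy-id failed for ${ip}" >&2
    fi
  done
}

# Usage (NODES is deliberately unquoted so it splits into one argument per IP):
# DRY_RUN=1 distribute_keys $NODES
# distribute_keys $NODES
```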

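- 3. On the ansible control machine: because the environment has no Internet access, ansible 2.9.27 is pre-installed in the centos7 container (note: epel-release must be installed before ansible). Check the version: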

ansible --version

- 4. On the ansible control machine, define the group names and the inventory of managed hosts

cat > /etc/ansible/hosts <<EOF

[harbor]

10.30.3.201 hostname=harbor ansible_ssh_port='22'

[rancher]

10.30.3.202 hostname=rancher ansible_ssh_port='22'

[k8s_master]

10.30.3.203 hostname=k8s-master-01 ansible_ssh_port='22'

10.30.3.204 hostname=k8s-master-02 ansible_ssh_port='22'

10.30.3.205 hostname=k8s-master-03 ansible_ssh_port='22'

[k8s_worker]

10.30.3.206 hostname=k8s-worker-01 ansible_ssh_port='22'

10.30.3.207 hostname=k8s-worker-02 ansible_ssh_port='22'

10.30.3.208 hostname=k8s-worker-03 ansible_ssh_port='22'

EOF

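- 5. On the ansible control machine, verify connectivity per group or per IP. The sed command uncomments host_key_checking in /etc/ansible/ansible.cfg (the stock line reads #host_key_checking = False), so ansible no longer stops at unknown host key prompts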

sed -i "s/#host_key_checking/host_key_checking/" /etc/ansible/ansible.cfg

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'hostname' -o

- 6. On the ansible control machine, install rsync so that playbooks can use the synchronize module (it syncs files more efficiently than copy)

cd /xbank/basic/init/rpm/

rpm -qa | grep rsync || rpm -ivh rsync-3.1.2-12.el7_9.x86_64.rpm

2.3. All nodes - Initialize the OS and reboot ✔

- On the ansible control machine, use ansible to initialize the k8s environment on all nodes

cd /xbank/basic/init/

cat /etc/ansible/hosts | grep port | awk '{print $1,$2}' | awk -F"hostname=" '{print $1 $2}' > /opt/.k8s.hosts

ansible-playbook ansible-k8s-init.yml -e "hosts=harbor,rancher,k8s_master,k8s_worker user=root"
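The /opt/.k8s.hosts pipeline above turns each inventory line such as 10.30.3.201 hostname=harbor ansible_ssh_port='22' into an /etc/hosts-style entry 10.30.3.201 harbor (presumably consumed by ansible-k8s-init.yml; that is an assumption). The same output from a single awk call, wrapped in a hypothetical function:

```shell
# Convert inventory lines "IP hostname=NAME ansible_ssh_port=..." into "IP NAME",
# matching the grep/awk/awk pipeline above. Group headers like [harbor] are skipped
# because they contain no "port".
inventory_to_hosts() {
  awk '/port/ { sub(/^hostname=/, "", $2); print $1, $2 }' "${1:-/etc/ansible/hosts}"
}

# Usage:
# inventory_to_hosts > /opt/.k8s.hosts
```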

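- On the ansible control machine, reboot all nodes (required in particular after SELinux has been disabled). The reboot command itself may be reported as failed because the SSH sessions drop. Once the nodes are back, check /etc/hosts and the hostnames: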

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'shutdown -r now' -o

ansible harbor -m shell -a 'cat /etc/hosts'

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'hostname' -o

2.4. All nodes - Mount the data disk ✔

- On the ansible control machine, confirm that every node has its data disk mounted at /data

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'fdisk -l | grep Disk | grep dev | egrep "(sd|vd)"' -o

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'df -Th | grep data' -o
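df -Th | grep data matches any line containing "data". Once NFS is mounted in 2.6, that includes the 10.30.3.201:/data/nfs-share mount on every node. A stricter check is whether /data is itself a mount point. A sketch using only df and awk, with a hypothetical function name:

```shell
# Succeed only if TARGET is itself a mount point: df reports the filesystem that
# contains TARGET, and its "Mounted on" column (field 6) must equal TARGET.
is_mount_point() {
  local target=${1:-/data} mounted_on
  mounted_on=$(df -P "$target" 2>/dev/null | awk 'NR == 2 { print $6 }')
  [ -n "$mounted_on" ] && [ "$mounted_on" = "$target" ]
}

# Usage on a node:
# is_mount_point /data && echo "/data ok" || echo "/data NOT mounted"
```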

2.5. Specific node - Deploy nfs-server ✔

- On the ansible control machine, install the nfs-server service on the nfs-server node only; in this example the nfs-server is 10.30.3.201

cd /xbank/basic/init/

nfs_server=10.30.3.201

nfs_folder=/data/nfs-share

ip_prefix=$(echo $nfs_server | grep -E -o "([0-9]{1,3}\.){3}")

ansible-playbook ansible-nfs-server.yml -e "hosts=$nfs_server user=root nfs_folder=$nfs_folder ip_prefix=$ip_prefix"
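The same prefix (10.30.3. for 10.30.3.201) can be derived with shell parameter expansion, with no regex involved. The export list shown in 6.2 (/data/nfs-share 10.30.3.0/24) suggests the playbook turns the prefix into a /24 network. The second helper below assumes that, and both names are hypothetical:

```shell
# "10.30.3.201" -> "10.30.3." (drop the last octet, keep the trailing dot)
ip_prefix_of() {
  local ip=$1
  echo "${ip%.*}."
}

# Assumed /24 export network, e.g. "10.30.3.0/24"
nfs_network_of() {
  echo "$(ip_prefix_of "$1")0/24"
}

# Usage:
# ip_prefix=$(ip_prefix_of "$nfs_server")
```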

- On the ansible control machine, check the nfs-server exports; in this example the nfs-server is 10.30.3.201

nfs_server=10.30.3.201

ansible $nfs_server -m shell -a 'showmount --exports'

2.6. All nodes - Mount NFS ✔

- On the ansible control machine, mount NFS on all nodes; in this example the nfs-server is 10.30.3.201

cd /xbank/basic/init/

nfs_server=10.30.3.201

nfs_folder=/data/nfs-share

ansible-playbook ansible-nfs-client.yml -e "hosts=harbor,rancher,k8s_master,k8s_worker user=root nfs_server=$nfs_server nfs_folder=$nfs_folder"

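- On the ansible control machine, check the NFS mounts on all nodes, then write a timestamp from one node and read it back on every node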

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'df -Th | grep nfs' -o

ansible rancher -m shell -a 'echo $(date +%Y.%m.%d.%H:%M:%S) > /mnt/share/123.txt'

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'cat /mnt/share/123.txt' -o

2.7. Specific node - Deploy ntp-server ✔

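- On the ansible control machine, configure the ntp-server service on the ntp-server node only (ntp was already installed during initialization, so only the server entry needs to be added), then sync all nodes against it once; in this example the ntp-server is 10.30.3.201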

ntp_server=10.30.3.201

ansible $ntp_server -m shell -a 'cat /etc/ntp.conf | grep "server" | grep "127.127.1.0" || echo "server 127.127.1.0" >> /etc/ntp.conf'

ansible $ntp_server -m shell -a 'systemctl restart ntpd.service'

ntp_server=10.30.3.201

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a "/usr/sbin/ntpdate -u $ntp_server" -o
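server 127.127.1.0 makes ntpd serve its own local clock, which an isolated (offline) NTP server needs. It is commonly paired with fudge 127.127.1.0 stratum 10 so that clients treat it as a low-priority source. Adding that second line is a suggestion, not part of the original procedure. A sketch of an idempotent edit to run on the ntp-server node, with a hypothetical function name:

```shell
# Append the local-clock lines to ntp.conf only if they are missing (exact line
# match), so the function can be re-run safely.
ensure_local_clock() {
  local conf=${1:-/etc/ntp.conf} line
  for line in "server 127.127.1.0" "fudge 127.127.1.0 stratum 10"; do
    grep -qxF "$line" "$conf" 2>/dev/null || echo "$line" >> "$conf"
  done
}

# Usage on the ntp-server node:
# ensure_local_clock /etc/ntp.conf && systemctl restart ntpd.service
```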

2.8. All nodes - Deploy the NTP sync job ✔

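- On the ansible control machine, create a time-sync cron job on every node (it runs at minute 01 of every hour); in this example the ntp-server is 10.30.3.201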

ntp_server=10.30.3.201

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a "crontab -l | grep ntpdate || echo '01 * * * * /usr/sbin/ntpdate -u $ntp_server >/dev/null 2>&1' >> /var/spool/cron/root" -o

- On the ansible control machine, confirm that the cron job exists

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'crontab -l | grep ntpdate' -o
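The command above skips any node that already has an ntpdate entry, so changing ntp_server later leaves stale entries in place. The hypothetical variant below replaces the existing ntpdate line. Like the original command, it writes the cron spool file directly (/var/spool/cron/root on these RHEL-like systems):

```shell
# Replace any ntpdate line in a cron spool file with one pointing at SERVER,
# running at minute 01 of every hour (same schedule as above). Other jobs are kept.
upsert_ntp_cron() {
  local spool=$1 server=$2 tmp
  tmp=$(mktemp) || return 1
  if [ -e "$spool" ]; then
    grep -v 'ntpdate' "$spool" > "$tmp"
    # grep exits 1 when no line is left (fine); 2 means a read error, so abort
    [ $? -le 1 ] || { rm -f "$tmp"; return 1; }
  fi
  echo "01 * * * * /usr/sbin/ntpdate -u ${server} >/dev/null 2>&1" >> "$tmp"
  cat "$tmp" > "$spool" || { rm -f "$tmp"; return 1; }
  rm -f "$tmp"
  # cron spool files are owner-only, as crontab(1) creates them
  chmod 600 "$spool"
}

# Usage as root on a node:
# upsert_ntp_cron /var/spool/cron/root 10.30.3.201
```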

3. Build the k8s environment

3.1. harbor node - Deploy harbor 2.3.4 ✔

- On the ansible control machine, install Docker CE 24.0.1 on the harbor node

cd /xbank/basic/init/

ansible-playbook ansible-install-docker.yml -e "hosts=harbor user=root"

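- On the ansible control machine, deploy harbor 2.3.4 on the harbor node (expected duration about 00:06:04), then read the generated admin password from harbor.yml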

cd /xbank/basic/init/

time ansible-playbook ansible-harbor-234.yml -e "hosts=harbor user=root"

ansible harbor -m shell -a 'cat /var/lib/docker/harbor/harbor.yml | grep harbor_admin_password' -o

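- Log in to the harbor console; in this example the harbor node IP is 10.30.3.201

Address: https://10.30.3.201:6443

Account: admin

Password: Admin_123456

- To test the registry from a host running Docker, map harbor.fusionfintrade.com to the harbor node in /etc/hosts, pull a test image, then remove it: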

cat /etc/hosts | grep fusionfintrade | grep "harbor.fusion" || echo "10.30.3.201 harbor.fusionfintrade.com" >> /etc/hosts

docker pull harbor.fusionfintrade.com:6443/ops/pause:3.8

docker images | grep pause

docker rmi -f $(docker images | grep "pause" | awk '{print $3}')

3.2. rancher node - Deploy rancher 2.7.2 ✔

- Step 1. On the ansible control machine, install Docker CE 24.0.1 on the rancher node

cd /xbank/basic/init/

ansible-playbook ansible-install-docker.yml -e "hosts=rancher user=root"

- Step 2. On the ansible control machine, install rancher 2.7.2 on the rancher node (the master and worker nodes no longer depend on Docker); expected duration about 00:02:12

cd /xbank/basic/init/

time ansible-playbook ansible-rancher-272.yml -e "hosts=rancher user=root"

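- Step 3. Open the rancher console and reset the admin password; in this example the rancher node IP is 10.30.3.202

Login URL: https://10.30.3.202:8443

Initial account: admin

Initial password: retrieve it with ansible rancher -m shell -a "docker logs rancher 2>&1 | grep Password" -o

New password: Admin_123456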

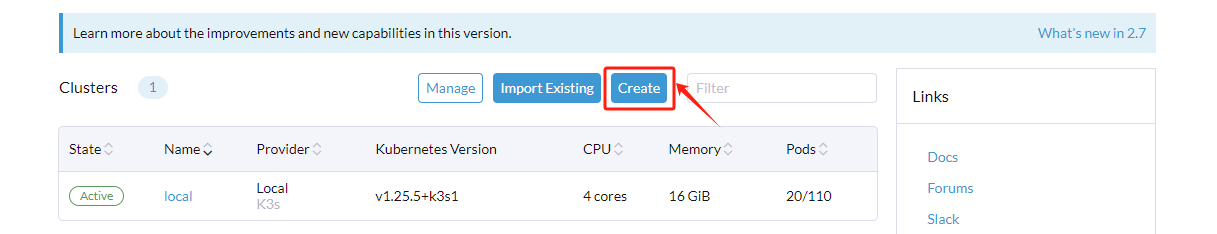

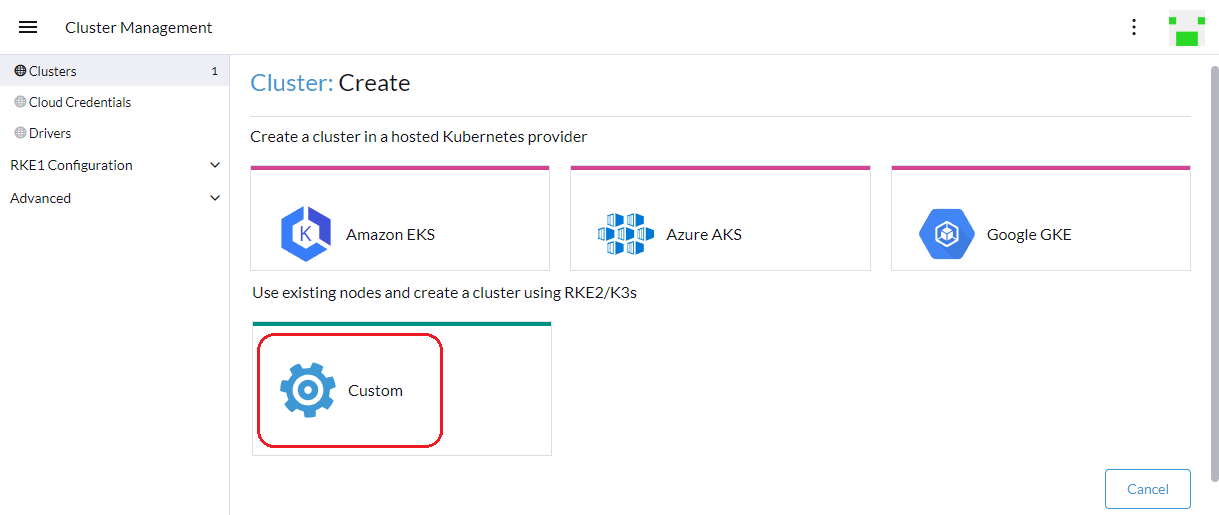

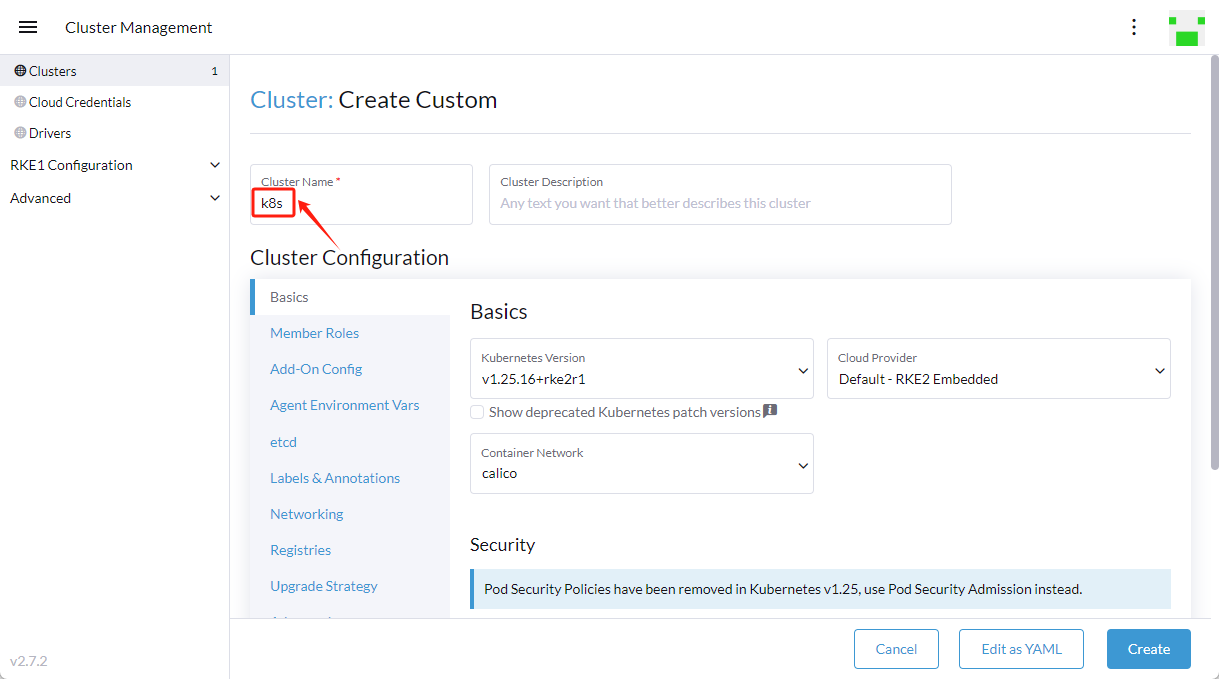

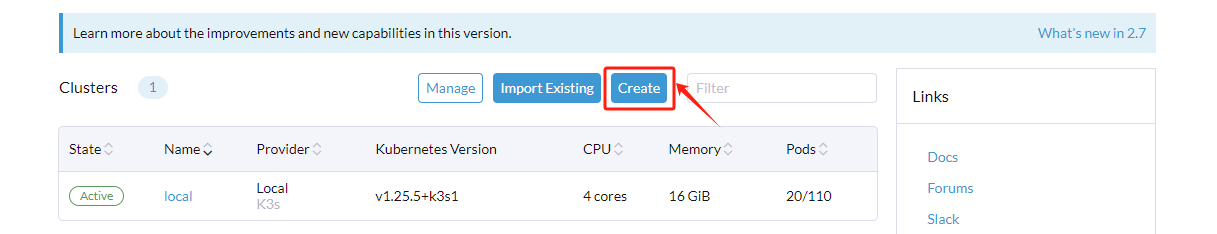

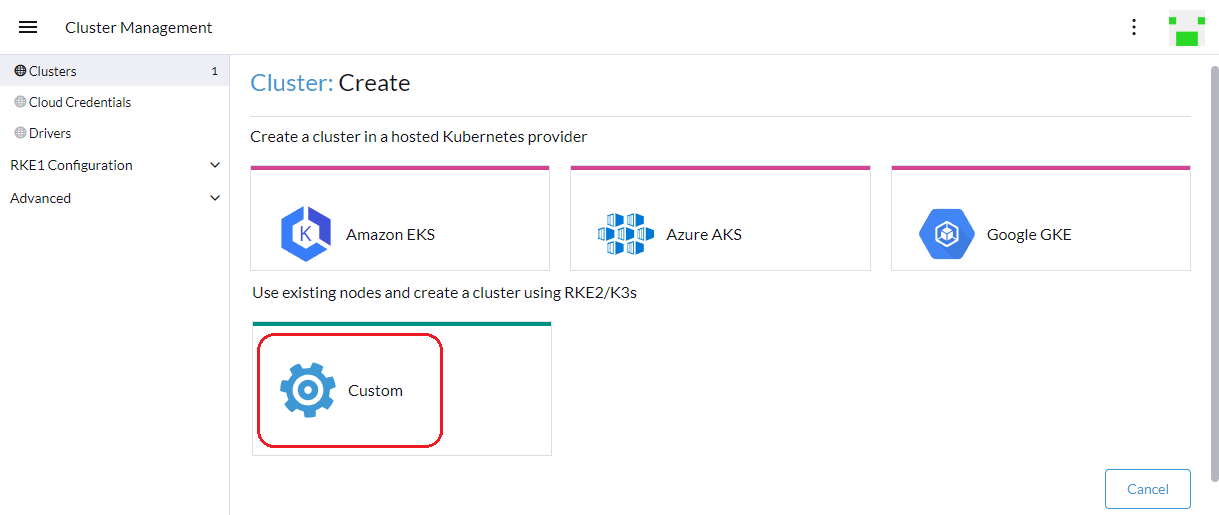

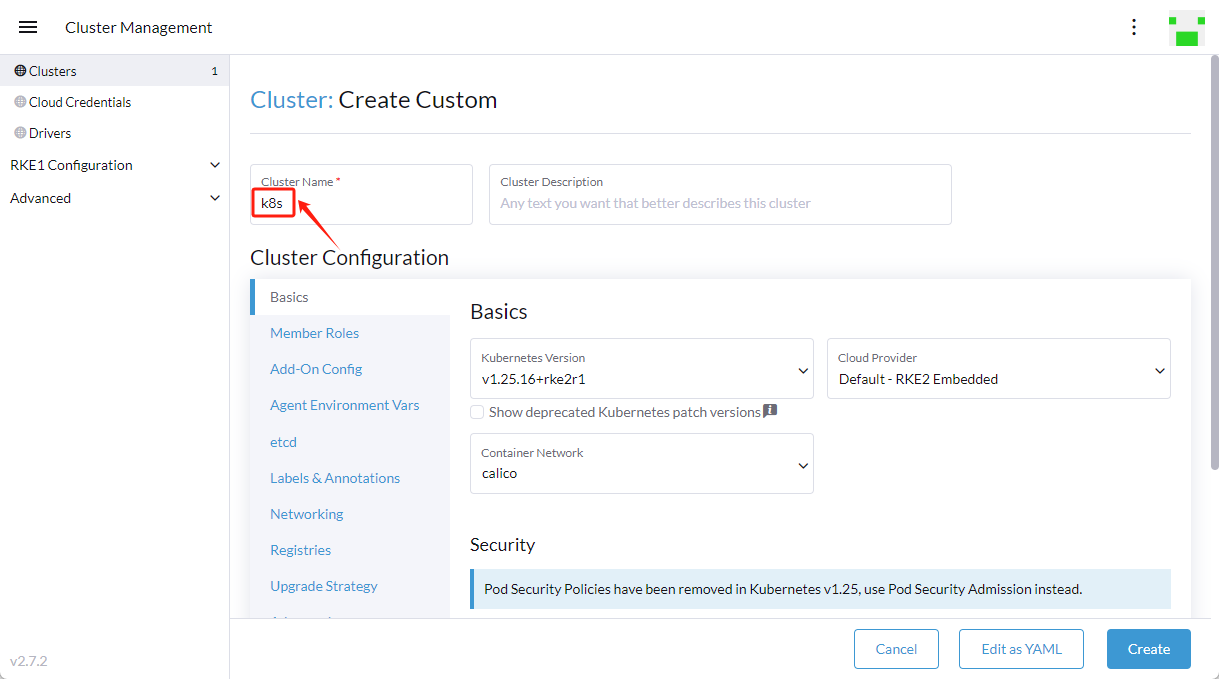

- Step 5. Select Custom, i.e. use existing nodes and create the cluster with RKE

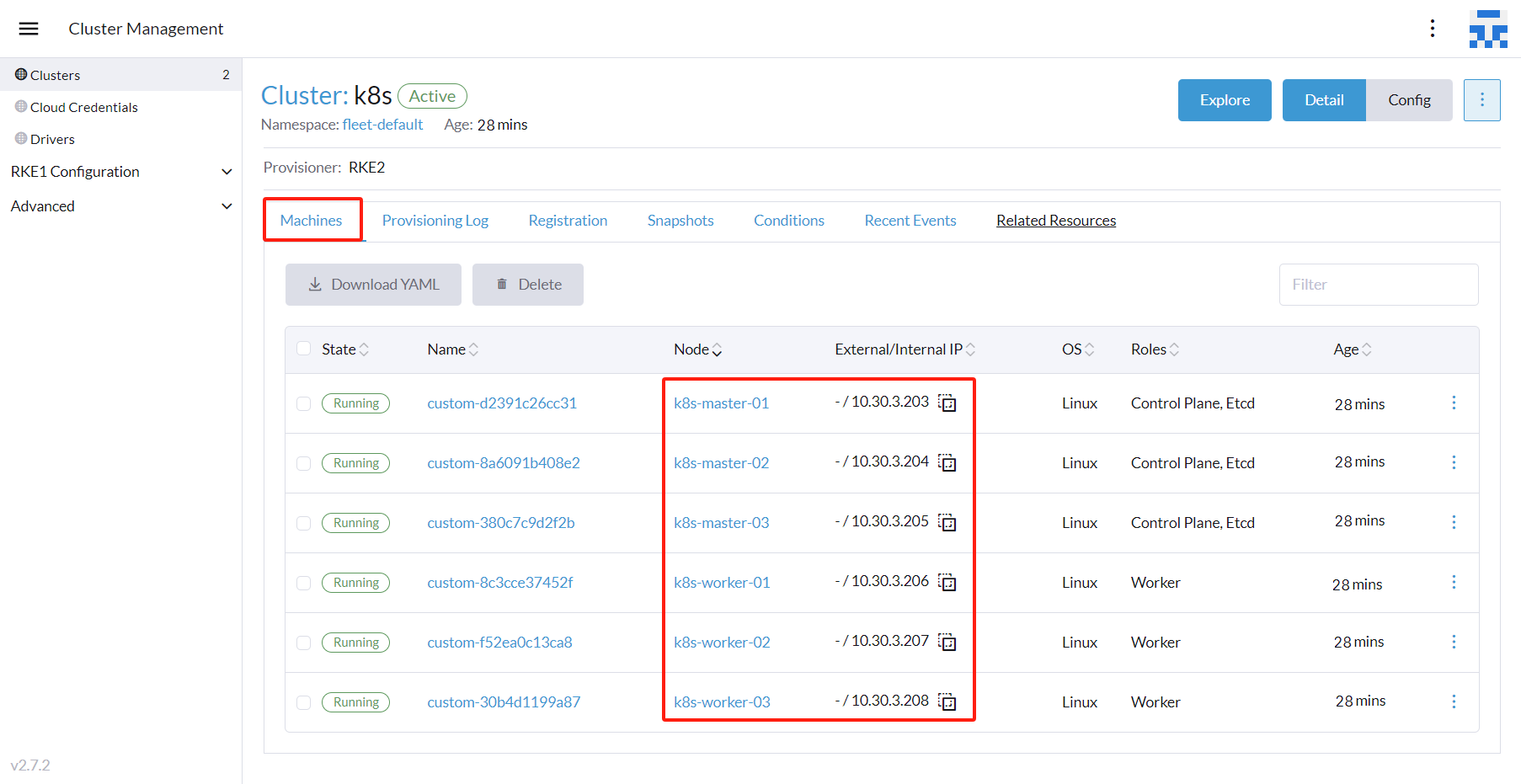

- Step 7. For role selection, on the Registration tab, check etcd + Control Plane for the 3 master nodes and Worker for the 3 worker nodes

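- Step 8. Run the command copied in the previous step in an SSH terminal on the corresponding nodes, as shown below (do not copy the sample snippets; use the command from your own Rancher console):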

[root@k8s-master-xx ~]

[root@k8s-worker-xx ~]

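- Step 9. Wait for cluster initialization to finish (note: the firewall must be disabled), then check the node status; expected duration about 00:28:15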

cd /xbank/basic/init/

ansible k8s_master,k8s_worker -m shell -a 'systemctl status firewalld.service | grep Active' -o

ansible k8s_master,k8s_worker -m shell -a 'systemctl status rancher-system-agent.service | grep Active' -o
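With many nodes, it is easier to print only the exceptions. A sketch that assumes systemctl is-active <unit> runs through ansible with -o, and that each one-line result ends with the command's stdout (for example 10.30.3.203 | CHANGED | rc=0 | (stdout) active). The helper name is hypothetical:

```shell
# Read `ansible ... -o` lines on stdin and print the host (first field) of every
# line whose last field is not the expected state. Unreachable hosts end with
# error text, so they are printed too. Empty lines are ignored.
hosts_not_in_state() {
  local expected=$1
  awk -v want="$expected" 'NF && $NF != want { print $1 }'
}

# Usage (expected: agent running, firewalld stopped):
# ansible k8s_master,k8s_worker -m shell -a 'systemctl is-active rancher-system-agent' -o | hosts_not_in_state active
# ansible k8s_master,k8s_worker -m shell -a 'systemctl is-active firewalld' -o | hosts_not_in_state inactive
```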

3.3. rancher node - Manage k8s with kubectl ✔

- Step 1. On the ansible control machine, install kubectl v1.26.3 on the master nodes

cd /xbank/basic/init/

ansible-playbook ansible-k8s-set.yml -e "hosts=k8s_master user=root"

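- Step 2. In the rancher console, click Copy KubeConfig to Clipboard in the upper-right corner of the cluster home page, and paste the content over ~/.kube/config on a master node so that kubectl can manage the k8s resources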

mkdir -p ~/.kube

touch ~/.kube/config

kubectl version --short

kubectl get componentstatuses

kubectl get nodes
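A kubeconfig copied from Rancher contains an access token, so it is worth making it readable only by its owner. A sketch that installs a saved copy with restrictive permissions; saving the clipboard content to a file first and the function name are assumptions:

```shell
# Install a kubeconfig into a .kube directory with owner-only permissions.
install_kubeconfig() {
  local src=$1 kube_dir=${2:-$HOME/.kube}
  [ -s "$src" ] || { echo "empty or missing kubeconfig: $src" >&2; return 1; }
  mkdir -p "$kube_dir" && chmod 700 "$kube_dir"
  # install(1) copies the file and sets its mode in one step
  install -m 600 "$src" "$kube_dir/config"
}

# Usage (hypothetical source path):
# install_kubeconfig /root/rancher-kubeconfig.yaml && kubectl get nodes
```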

3.4. Set up certificates [optional]

- Certificates are only needed when k8s is deployed from binaries; a rancher-based deployment does not require them

3.5. Deploy nginx + LVS load balancing [optional]

- For a binary k8s deployment, putting a load balancer in front of the api-server is recommended
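For illustration only: a layer-4 (TCP) proxy in front of the three masters can be expressed with the nginx stream module. The sketch below generates such a block. It assumes nginx is built with the stream module, runs on a separate load-balancer host (listening on 6443 on a master would clash with the api-server), and that the masters serve the api-server on 6443. The LVS/keepalived floating IP is not covered, and the function name is hypothetical:

```shell
# Print an nginx "stream" block (top-level context, not inside "http") that
# spreads TCP connections across the api-servers.
# Usage: gen_apiserver_stream LISTEN_PORT MASTER_IP...
gen_apiserver_stream() {
  local listen_port=$1 ip
  shift
  echo "stream {"
  echo "    upstream kube_apiserver {"
  for ip in "$@"; do
    echo "        server ${ip}:6443 max_fails=3 fail_timeout=30s;"
  done
  echo "    }"
  echo "    server {"
  echo "        listen ${listen_port};"
  echo "        proxy_pass kube_apiserver;"
  echo "        proxy_connect_timeout 2s;"
  echo "    }"
  echo "}"
}

# Usage on the load-balancer host, then validate the config:
# gen_apiserver_stream 6443 10.30.3.203 10.30.3.204 10.30.3.205 >> /etc/nginx/nginx.conf && nginx -t
```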

4. Deploy the business systems

5. Operations Manual

5.1. Initial account information

- The initial accounts and passwords of the xxx services are listed below:

| Module | Service | Web address | Account | Password | Notes |
| --- | --- | --- | --- | --- | --- |
| Common | harbor console | https://{harbor}:6443 | admin | Admin_123456 | - |
| Common | rancher console | https://{rancher}:8443 | admin | Admin_123456 | - |
| - | minio console | http://{minio}:9100 | minioadmin | fMq4rFTuv_Lm3EaH | - |
| - | nacos console | http://{nacos}:8848/nacos | nacos | pFDQqSOhZcH_G3Cs | nacos administrator |
| - | redis | - | - | Admin_147 | - |
| - | mysql | - | root | Admin_147 | - |

5.2. Installation directories and start/stop commands

- The installation directories of the xxx services (replace $workdir with the actual path) and their start/stop scripts are listed below:

| Module | Service | Installation directory | Start command | Stop command |
| --- | --- | --- | --- | --- |
| - | mysql | $workdir/mysql_3306 | sh start.sh | sh stop.sh |
| - | minio | $workdir/minio_9000 | sh start.sh | sh stop.sh |

5.3. Health check endpoints

| Module | Service | Endpoint | Method | Check rule |
| --- | --- | --- | --- | --- |
| - | xxl-job | http://{xxljob}:7777/xxl-job-admin/actuator/health | GET | Unhealthy if the response contains DOWN |
| - | nacos | http://{nacos}:8848/nacos/actuator/health | GET | Unhealthy if the response contains DOWN |
| - | minio | http://{minio}:9000/minio/health/live | GET | Unhealthy if the HTTP status is not 200 |
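The two check rules in the table can be scripted with curl. A sketch with hypothetical function names. Treating "no response" (curl reports status 000) as unhealthy is an added assumption, not part of the table:

```shell
# Apply one of the table's rules to a response; prints OK or DOWN.
# RULE "body":   unhealthy if the body contains DOWN (xxl-job, nacos)
# RULE "status": unhealthy unless the HTTP status is 200 (minio)
evaluate_health() {
  local rule=$1 status=$2 body=$3
  # No HTTP response at all (curl missing or connection failed) counts as DOWN.
  if [ -z "$status" ] || [ "$status" = "000" ]; then echo DOWN; return 0; fi
  case "$rule" in
    body)
      case "$body" in
        *DOWN*) echo DOWN ;;
        *) echo OK ;;
      esac ;;
    status)
      if [ "$status" = "200" ]; then echo OK; else echo DOWN; fi ;;
    *)
      echo "unknown rule: $rule" >&2; return 2 ;;
  esac
}

# Fetch URL with a 5 s timeout and evaluate the response.
probe_health() {
  local rule=$1 url=$2 body_file status body
  body_file=$(mktemp) || return 1
  status=$(curl -s -m 5 -o "$body_file" -w '%{http_code}' "$url")
  body=$(cat "$body_file")
  rm -f "$body_file"
  evaluate_health "$rule" "$status" "$body"
}

# Usage (replace the {placeholders} as in the table):
# probe_health body   "http://{nacos}:8848/nacos/actuator/health"
# probe_health status "http://{minio}:9000/minio/health/live"
```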

6. Appendix

6.1. Rollback plan

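- Roll back ansible: on the ansible control machine, first delete the authorized key file on all nodes (revoking passwordless login), then uninstall ansible. Note that deleting /root/.ssh/authorized_keys removes every key authorized for root, not only the one added by ssh-copy-id.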

ansible harbor,rancher,k8s_master,k8s_worker -m shell -a 'rm -f /root/.ssh/authorized_keys' -o

echo > /etc/ansible/hosts

echo > /root/.ssh/known_hosts

yum remove -y -q ansible

docker stop $(docker ps -a -q)

docker rm $(docker ps -a -q)

docker rmi -f $(docker images -q)

rm -rf /opt/xbank/harbor

rm -rf /var/lib/docker/harbor
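Where other root keys on the nodes must be kept, a narrower rollback removes only the control machine's key. Ansible's authorized_key module supports this with state=absent (the commented command below has not been tested here). The shell sketch applies the same idea to a single file and uses a hypothetical function name:

```shell
# Remove the lines of PUB_FILE (e.g. ~/.ssh/id_rsa.pub) from KEYS_FILE and keep
# all other keys. The file is rewritten in place so its owner and mode are preserved.
remove_authorized_key() {
  local keys_file=$1 pub_file=$2 tmp rc
  tmp=$(mktemp) || return 1
  grep -vxF -f "$pub_file" "$keys_file" > "$tmp"
  rc=$?
  # rc 1 only means no line survived; rc >= 2 is a real error (e.g. missing file)
  if [ "$rc" -ge 2 ]; then
    rm -f "$tmp"
    return "$rc"
  fi
  cat "$tmp" > "$keys_file"
  rm -f "$tmp"
}

# Alternative with ansible's authorized_key module:
# ansible harbor,rancher,k8s_master,k8s_worker -m authorized_key -a "user=root state=absent key={{ lookup('file', '/root/.ssh/id_rsa.pub') }}"
```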

6.2. Problems encountered when running ansible

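- Error 1: an ansible task failed. Re-running it with -vvvv showed the keyword Read-only file system, and running echo 123 > 123.txt on the target machine confirmed that its file system had gone read-only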

[root@ansible ~]

10.30.3.206 | UNREACHABLE!: Failed to create temporary directory.In some cases, you may have been able to authenticate and did not have permissions on the target directory.

mkdir: cannot create directory ‘/root/.ansible/tmp/ansible-tmp-1706695222.77-15907-53764453967055’: Read-only file system

- Error 2: mounting NFS failed with requested NFS version or transport protocol is not supported. Fix: follow the order initialize -> reboot -> mount NFS, and make sure nfs-utils is installed

[root@rancher $]

mount.nfs: requested NFS version or transport protocol is not supported

- Error 3: an ansible task failed, and checking the service on the nfs-server node showed clnt_create: RPC: Program not registered. Fix: restart rpcbind first and then nfs (the order matters)

[root@ansible ~]

10.30.3.201 | FAILED | rc=1 >>

clnt_create: RPC: Program not registerednon-zero return code

[root@nfs-server ~]

clnt_create: RPC: Program not registered

[root@nfs-server ~]

[root@nfs-server ~]

[root@nfs-server ~]

Export list for harbor:

/data/nfs-share 10.30.3.0/24

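- Error 4: an ansible task failed with rsync: connection unexpectedly closed. The task used synchronize, which runs rsync under the hood. The cause was that rsync was not installed on the target node, and installing it there fixes the error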

FAILED! => {"changed": false, "cmd": "/usr/bin/rsync ...", "msg": "Warning: Permanently added '10.30.3.205' (ECDSA) to the list of known hosts.\r\nrsync: connection unexpectedly closed

- Error 5 (unresolved): docker pull failed with failed to verify certificate: x509: certificate signed by unknown authority

[root@3.231 ~]

Error response from daemon: Get "https://harbor.fusionfintrade.com:6443/v2/": tls: failed to verify certificate: x509: certificate signed by unknown authority
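The Docker daemon looks for extra CA certificates for a registry under /etc/docker/certs.d/<host>:<port>/. One commonly used fix, not verified in this environment, is to place the certificate that signed harbor's server certificate there on every host that pulls from harbor. If harbor.fusionfintrade.com.crt (from 6.3) is self-signed, it can serve as that file. If it was issued by an internal CA, that CA's certificate is needed instead. A sketch with a hypothetical function name:

```shell
# Install a CA certificate where the Docker daemon looks for registry-specific CAs:
# <certs_root>/<host>:<port>/ca.crt
trust_registry_ca() {
  local registry=$1 ca_file=$2 certs_root=${3:-/etc/docker/certs.d}
  [ -s "$ca_file" ] || { echo "missing CA file: $ca_file" >&2; return 1; }
  mkdir -p "$certs_root/$registry"
  cp "$ca_file" "$certs_root/$registry/ca.crt"
}

# Usage on a Docker host (copy the certificate there first), then retry the pull:
# trust_registry_ca harbor.fusionfintrade.com:6443 /opt/xbank/harbor/cert/harbor.fusionfintrade.com.crt
# docker pull harbor.fusionfintrade.com:6443/ops/pause:3.8
```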

6.3. Deploying harbor manually

- If deploying harbor through ansible fails, log in to the harbor server and deploy it as follows:

cd /opt/xbank/harbor

tar -xvf harbor20231129.tar.gz --directory=/var/lib/docker

cd /var/lib/docker/harbor/

sh install.sh

rm -f /var/lib/docker/harbor/volume/secret/cert/*

\cp /opt/xbank/harbor/cert/harbor.fusionfintrade.com.crt /var/lib/docker/harbor/volume/secret/cert/server.crt

\cp /opt/xbank/harbor/cert/harbor.fusionfintrade.com.key /var/lib/docker/harbor/volume/secret/cert/server.key

docker restart nginx
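Before restarting nginx, it can help to confirm that the copied certificate and key belong together. nginx refuses to start with a mismatched pair. A sketch that compares the public keys with openssl (works for RSA and EC keys), with a hypothetical function name:

```shell
# Succeed if the certificate's public key equals the one derived from the private key.
cert_matches_key() {
  local crt=$1 key=$2 pub_crt pub_key
  pub_crt=$(openssl x509 -in "$crt" -noout -pubkey 2>/dev/null) || return 1
  pub_key=$(openssl pkey -in "$key" -pubout 2>/dev/null) || return 1
  [ -n "$pub_crt" ] && [ "$pub_crt" = "$pub_key" ]
}

# Usage:
# cert_matches_key /var/lib/docker/harbor/volume/secret/cert/server.crt \
#   /var/lib/docker/harbor/volume/secret/cert/server.key && docker restart nginx
```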