MongoDB Sharded Cluster Setup

[TOC]

1. Preparation

1.1. Resource Planning

- Machine inventory

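| Node | IP address | Hardware | Notes |
|---|---|---|---|
| Node 1 | 192.168.31.101 | 4C-32G-300G | Primary node |
| Node 2 | 192.168.31.102 | 4C-32G-300G | Candidate primary; keep its hardware the same as the primary's |
| Node 3 | 192.168.31.103 | 2C-16G-100G | Serves no application data, so a somewhat lower spec is acceptable |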

- Role plan

| Cluster role | Members | Configuration file |
|---|---|---|
| mongos routers | Node 1:3717, Node 2:3717 | /data/mongo_router_3717/mongod_3717.conf |
| config server replica set | Node 1:3718, Node 2:3718, Node 3:3718 | /data/mongo_config_3718/mongod_3718.conf |
| shard replica set 1 | Node 1:3701, Node 2:3701, Node 3:3701 | /data/mongo_shard_3701/mongod_3701.conf |
| shard replica set 2 | Node 2:3702, Node 1:3702, Node 3:3702 | /data/mongo_shard_3702/mongod_3702.conf |

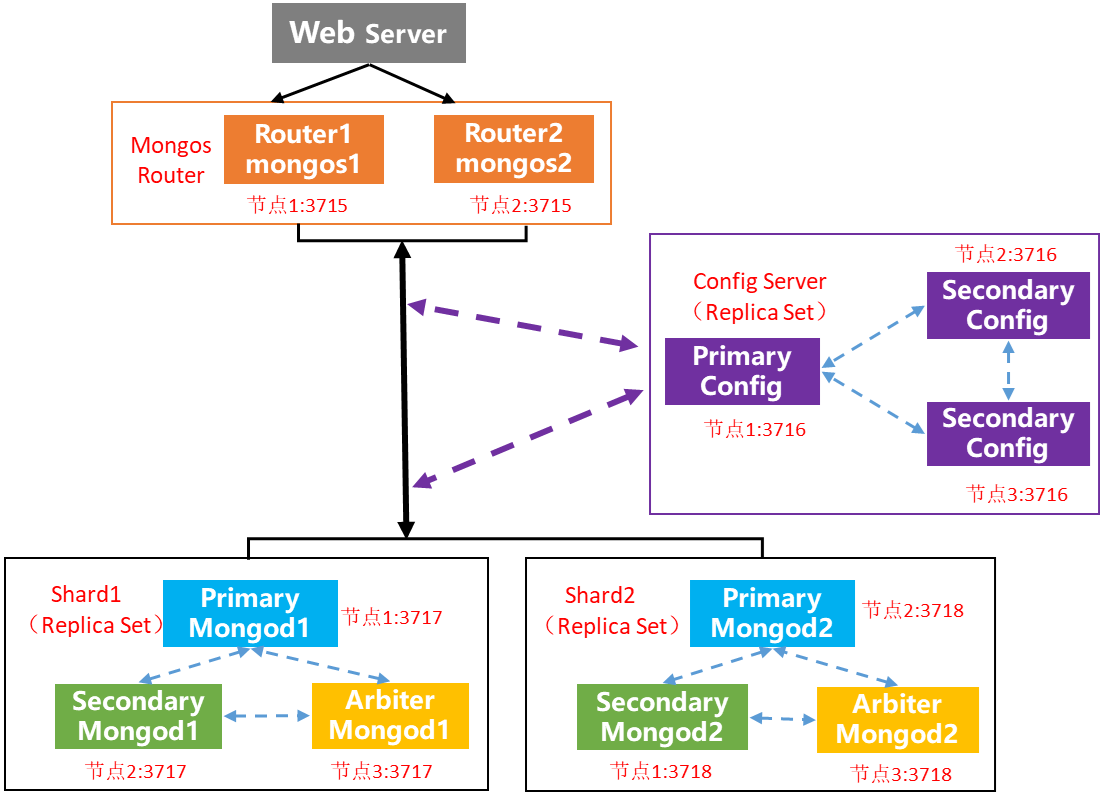

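- Sharded cluster architecture diagram

1.2. Environment Initialization

- Starting with MongoDB 5.0, the CPU must support the AVX instruction set. If the following command returns nothing, the CPU does not support AVX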

cat /proc/cpuinfo | grep avx
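To run this check from a script on several nodes, it can be wrapped in a function that also sets an exit status. This is a minimal sketch: check_avx is a hypothetical helper name, and the optional argument (a cpuinfo-format file) exists only so the function can be tried against a sample file. grep -w matches avx as a whole word, so a flag such as avx2 on its own does not count.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether the CPU flags include AVX,
# which MongoDB 5.0+ requires on x86_64.
check_avx() {
  local cpuinfo="${1:-/proc/cpuinfo}"   # optional path, defaults to the live system
  if grep -qw avx "$cpuinfo"; then
    echo "AVX supported"
    return 0
  else
    echo "AVX NOT supported: MongoDB 5.0+ will not run on this CPU"
    return 1
  fi
}
```

For example, putting check_avx || exit 1 at the top of an install script stops it before anything is downloaded on an unsupported CPU.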

- As root, adjust the environment parameters used during installation to match your environment

#.Example: define the 3 nodes

node1=192.168.31.101

node2=192.168.31.102

node3=192.168.31.103

#.With more nodes, edit the file below directly (note that the primary of shard1 is node1, while the primary of shard2 is node2)

cat > /opt/.mongo.config <<EOF

workdir=/data

mongo_user=dba_admin

mongo_pass=1_yyJnwRD48CbSql

mongos1_master=$node1

mongos2_master=$node2

config_master=$node1

config_slave2=$node2

config_slave3=$node3

shard1_master=$node1

shard1_slave2=$node2

shard1_slave3=$node3

shard2_master=$node2

shard2_slave2=$node1

shard2_slave3=$node3

mongos_port=3717

config_port=3718

shard1_port=3701

shard2_port=3702

EOF
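Every later step reads its hosts and ports from /opt/.mongo.config, so an empty value (for example, if node1 was not set in the shell when the heredoc above ran) only surfaces much later as a confusing failure. A minimal pre-flight sketch, assuming bash; check_mongo_config is a hypothetical helper, and its variable list simply mirrors the file above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: source the parameter file and report every variable
# that the later steps rely on but that is missing or empty.
check_mongo_config() {
  local file="${1:-/opt/.mongo.config}"
  local required="workdir mongo_user mongo_pass mongos1_master mongos2_master
    config_master config_slave2 config_slave3
    shard1_master shard1_slave2 shard1_slave3
    shard2_master shard2_slave2 shard2_slave3
    mongos_port config_port shard1_port shard2_port"
  # shellcheck disable=SC1090
  source "$file" || return 1
  local missing=0 name
  for name in $required; do
    # ${!name} is bash indirect expansion: the value of the variable named $name
    if [ -z "${!name}" ]; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```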

- All nodes: system initialization (safe to re-run)

#.set DNS resolver and timezone

cat /etc/resolv.conf | grep "^nameserver" || echo "nameserver 223.5.5.5" > /etc/resolv.conf

timedatectl set-timezone Asia/Shanghai

#.set ulimit

ulimit -n 65536

cat /etc/security/limits.conf | grep nofile | grep 65536 || echo "* - nofile 65536" >> /etc/security/limits.conf

cat /etc/rc.local | grep ulimit | grep 65536 || echo "ulimit -n 65536" >> /etc/rc.local

#.on CentOS 7, rc.local only runs at boot if it is executable

chmod +x /etc/rc.d/rc.local

#.disable hugepage

echo never > /sys/kernel/mm/transparent_hugepage/enabled

echo never > /sys/kernel/mm/transparent_hugepage/defrag

#.set vm.max_map_count

cat /etc/sysctl.conf | grep max_map_count || echo "vm.max_map_count=655360" >> /etc/sysctl.conf

/sbin/sysctl -p /etc/sysctl.conf

#.disable iptables

/usr/sbin/iptables -F

systemctl stop firewalld.service

systemctl disable firewalld.service

systemctl status firewalld.service
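The echo never writes above only last until the next reboot, because sysfs values are reset at boot, so it can be worth re-checking them after a restart. In these files the active mode is the word in square brackets, e.g. always madvise [never]. A minimal sketch; thp_active and verify_thp are hypothetical helpers, and the directory argument exists only so they can be run against sample files.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the active THP mode, i.e. the bracketed word,
# e.g. "always madvise [never]" -> never.
thp_active() {
  sed -n 's/.*\[\([a-z+]*\)\].*/\1/p' "$1"
}

# Check that both THP settings are "never"; the directory defaults to sysfs.
verify_thp() {
  local base="${1:-/sys/kernel/mm/transparent_hugepage}" f rc=0
  for f in enabled defrag; do
    if [ "$(thp_active "$base/$f")" != "never" ]; then
      echo "THP $f is not 'never'"
      rc=1
    fi
  done
  return "$rc"
}
```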

1.3. Prepare the MongoDB Configuration File

- All nodes: create the configuration file template

#.Configuration file template

cat > /tmp/mongo_config_tmp.txt <<EOF

quiet=false

timeStampFormat=iso8601-local

logappend=true

journal=true

#auth=true

directoryperdb=true

fork=true

oplogSize=102400

#replSet=set27017

port=27017

bind_ip=0.0.0.0

profile=1

slowms=500

maxConns=8000

cpu=true

storageEngine=wiredTiger

wiredTigerCacheSizeGB=my_MemTotle_GB_80

wiredTigerDirectoryForIndexes=true

wiredTigerIndexPrefixCompression=true

wiredTigerJournalCompressor=zlib

wiredTigerCollectionBlockCompressor=snappy

dbpath=my_mongo_data/mongo_27017/data

#keyFile=my_mongo_data/mongo_27017/data/keyfile

pidfilepath=my_mongo_data/mongo_27017/pid/mongod.pid

logpath=my_mongo_data/mongo_27017/log/mongod.log

EOF

#.Template script for creating logins

cat > /tmp/mongo_add_login.txt <<EOF

use admin;

db.createUser({user:'rwuser',pwd:'rIFz_1Se4B7pBK6Z',roles:['root']},{w:'majority',wtimeout:5000});

db.createUser({user:'dba_admin',pwd:'1_yyJnwRD48CbSql',roles:['root']},{w:'majority',wtimeout:5000});

exit;

EOF

#.Set wiredTigerCacheSizeGB to 40% of physical memory

MemTotle_GB=`awk '($1 == "MemTotal:"){print $2/1024/1024}' /proc/meminfo`

MemTotle_GB_80=`echo $MemTotle_GB | awk -F"." '{print $1*0.4}' | awk -F"." '{print $1}'`

if [[ $MemTotle_GB_80 -lt 1 ]]; then MemTotle_GB_80="1"; fi

sed -i "s/my_MemTotle_GB_80/${MemTotle_GB_80}/" /tmp/mongo_config_tmp.txt

cat /tmp/mongo_config_tmp.txt | grep wiredTigerCacheSizeGB
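The awk pipeline above can be mirrored in integer arithmetic, which also makes it easy to compare with MongoDB's documented default cache size, the larger of 50% of (RAM - 1 GB) and 256 MB. The comparison brings out one point worth checking: per the role plan, node 1 and node 2 each run three data-bearing mongod processes (shard1, shard2 and the config server), all generated from this single template, so their configured caches together exceed physical memory. A sketch, assuming bash; the function names are hypothetical, and the input is MemTotal in kB as found in /proc/meminfo.

```shell
#!/usr/bin/env bash
# Hypothetical helper: the 40% rule above, in whole GB.
cache_40pct_gb() {
  local mem_kb="$1"
  local gb=$(( mem_kb / 1024 / 1024 ))   # whole GB, like the awk pipeline
  local cache=$(( gb * 4 / 10 ))         # 40%, truncated
  (( cache < 1 )) && cache=1             # same lower bound of 1 GB
  echo "$cache"
}

# Hypothetical helper: MongoDB's documented default, in MB.
default_cache_mb() {
  local mem_kb="$1"
  local mb=$(( (mem_kb / 1024 - 1024) / 2 ))   # 50% of (RAM - 1 GB)
  (( mb < 256 )) && mb=256
  echo "$mb"
}

# Total cache budget in GB when N mongod processes on one host use the same setting.
total_cache_gb() {
  echo $(( $(cache_40pct_gb "$1") * $2 ))
}
```

For example, with MemTotal at 32778000 kB (about 31 GB), cache_40pct_gb gives 12 and total_cache_gb 32778000 3 gives 36, i.e. more cache than RAM on node 1 and node 2.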

1.4. Prepare the MongoDB Installation Files

- All nodes: download the installation files

cd /opt/

curl -L http://iso.sqlfans.cn/linux/numactl-2.0.12-5.el7.x86_64.rpm -o /opt/numactl-2.0.12-5.el7.x86_64.rpm

curl -L http://iso.sqlfans.cn/linux/openssl-1.0.2k-26.el7_9.x86_64.rpm -o /opt/openssl-1.0.2k-26.el7_9.x86_64.rpm

curl -L http://iso.sqlfans.cn/mongodb/mongodb-linux-x86_64-rhel70-6.0.6.tgz -o /opt/mongodb-linux-x86_64-rhel70-6.0.6.tgz

curl -L http://iso.sqlfans.cn/mongodb/mongosh-1.6.0-linux-x64.tgz -o /opt/mongosh-1.6.0-linux-x64.tgz

- All nodes: create the user and install basic dependencies (safe to re-run)

#.Create the user

cat /etc/group | grep mongodb || groupadd mongodb

cat /etc/passwd | grep mongodb || useradd mongodb -g mongodb -s /usr/sbin/nologin

#.Install basic dependencies

if [ `rpm -qa numactl | wc -l` -eq 0 ];then rpm -ivh /opt/numactl-2.0.12-5.el7.x86_64.rpm > /dev/null ; fi

if [ `rpm -qa openssl | wc -l` -eq 0 ];then rpm -ivh /opt/openssl-1.0.2k-26.el7_9.x86_64.rpm > /dev/null ; fi

- All nodes: extract MongoDB 6.0.6 (safe to re-run)

cd /opt/

rm -rf /usr/local/mongodb6

tar -xvf mongodb-linux-x86_64-rhel70-6.0.6.tgz -C /usr/local > /dev/null

mv /usr/local/mongodb-linux-x86_64-rhel70-6.0.6 /usr/local/mongodb6

tar -xvf mongosh-1.6.0-linux-x64.tgz > /dev/null

mv mongosh-1.6.0-linux-x64/bin/mongosh /usr/local/mongodb6/bin/

rm -rf mongosh-1.6.0-linux-x64

ln -sfv /usr/local/mongodb6/bin/mongosh /usr/bin/

chown -R mongodb:mongodb /usr/local/mongodb6

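1.5. Prepare the MongoDB Keyfile

- Any one node: generate a keyfile with openssl to serve as the single keyfile for the whole cluster; every member must use the same file (storing it in a shared location lets the other nodes fetch it quickly)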

openssl rand -base64 741 > /opt/keyfile_2021_tmp.txt

#.Shared copy: curl -sL http://iso.sqlfans.cn/mongodb/config/keyfile_2021_tmp.txt
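Before distributing the keyfile, it can be checked against MongoDB's documented keyfile rules: 6 to 1024 characters from the base64 set (whitespace is ignored), and on UNIX no group or world permissions, which is what the later chmod 600 steps ensure. A minimal sketch, assuming bash and GNU stat; check_keyfile is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: validate a MongoDB keyfile's length, character set
# and permissions.
check_keyfile() {
  local f="$1" content len perm
  content=$(tr -d ' \t\r\n' < "$f")     # whitespace does not count
  len=${#content}
  if (( len < 6 || len > 1024 )); then
    echo "bad length: $len"; return 1
  fi
  if [[ ! "$content" =~ ^[A-Za-z0-9+/=]+$ ]]; then
    echo "non-base64 characters found"; return 1
  fi
  perm=$(stat -c '%a' "$f")             # octal mode, e.g. 600
  if (( (8#$perm & 8#077) != 0 )); then
    echo "permissions $perm too open, run: chmod 600 $f"; return 1
  fi
  echo "ok ($len characters)"
}
```

For the openssl rand -base64 741 output above, the length check sees 988 characters (741 bytes encode to exactly 988 base64 characters); since that file is created with the default umask, it needs chmod 600 before the permission check passes.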

2. Install the MongoDB Sharded Cluster

2.1. Set Up the First Shard Replica Set

- All nodes: start the mongod service

#.Load the parameters from the config file

source /opt/.mongo.config

#.Generate the configuration file

mkdir -p ${workdir}/mongo_shard_${shard1_port}/{data,log,pid}

curl -sL http://iso.sqlfans.cn/mongodb/config/keyfile_2021_tmp.txt -o ${workdir}/mongo_shard_${shard1_port}/data/keyfile

chmod 600 ${workdir}/mongo_shard_${shard1_port}/data/keyfile

cat /tmp/mongo_config_tmp.txt > ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

sed -i 's/#replSet/replSet/g' ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

sed -i "s/27017/${shard1_port}/" ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

sed -i "s:my_mongo_data:${workdir}:g" ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

sed -i "s/mongo_${shard1_port}/mongo_shard_${shard1_port}/" ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

chown -R mongodb.mongodb ${workdir}/mongo_shard_${shard1_port}

#.Add the shard-specific parameter

sed -i '/shardsvr/d' ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

echo "shardsvr=true" >> ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

cat ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf | egrep "(port|bind_ip|path|auth|replSet|keyFile|shardsvr)"

#.Start the service

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

#.Login test

# echo "db.version();" | mongosh --host 127.0.0.1 --port ${shard1_port}

#.Add to boot-time startup

cat /etc/rc.local | grep ${shard1_port} || echo "sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf" >> /etc/rc.local

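- Any one node: create the replica set initialization script (give the primary node the highest priority, 20 in this example, so that it does not end up as a Secondary after initialization)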

cat > /tmp/mongo_shard1_init.txt <<EOF

use admin;

config = {_id: 'set${shard1_port}', members: [

{_id: 0, host: '${shard1_master}:${shard1_port}', priority: 20},

{_id: 1, host: '${shard1_slave2}:${shard1_port}', priority: 15},

{_id: 2, host: '${shard1_slave3}:${shard1_port}', priority: 0, arbiterOnly: true}]

};

rs.initiate(config);

rs.status();

exit;

EOF
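The shard1 and shard2 scripts differ only in port and host order, so a small generator avoids copy-paste mistakes such as swapping the primary and the arbiter. A sketch, assuming bash; make_psa_init is a hypothetical helper that prints the same primary/secondary/arbiter layout and priorities (20, 15, 0) as the heredoc above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print an rs.initiate() script for a
# primary/secondary/arbiter replica set named set<port>.
# Arguments: port primary_host secondary_host arbiter_host
make_psa_init() {
  local port="$1" primary="$2" secondary="$3" arbiter="$4"
  cat <<EOF
use admin;
config = {_id: 'set${port}', members: [
  {_id: 0, host: '${primary}:${port}', priority: 20},
  {_id: 1, host: '${secondary}:${port}', priority: 15},
  {_id: 2, host: '${arbiter}:${port}', priority: 0, arbiterOnly: true}]
};
rs.initiate(config);
rs.status();
exit;
EOF
}
```

Usage: make_psa_init "$shard1_port" "$shard1_master" "$shard1_slave2" "$shard1_slave3" > /tmp/mongo_shard1_init.txt, and likewise with the shard2 variables.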

- Any one node: initialize the replica set, then run the account-creation script

#.Initialize the replica set

mongosh --host ${shard1_master} --port ${shard1_port} admin < /tmp/mongo_shard1_init.txt

#.Wait for initialization to finish (about 5 seconds for an empty cluster)

sleep 10;

#.Run the account-creation script

mongosh --host ${shard1_master} --port ${shard1_port} admin < /tmp/mongo_add_login.txt

echo "show users;" | mongosh --host ${shard1_master} --port ${shard1_port} admin
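The fixed sleep 10 above is a guess; polling the intended primary until it reports itself writable is more robust on slower hosts. A minimal sketch, assuming bash and mongosh on PATH; wait_for_primary is a hypothetical helper. It relies on db.hello().isWritablePrimary (MongoDB 5.0+), and since the hello command does not require authentication, no credentials are passed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll host:port until it reports a writable primary,
# trying once per second up to the given number of attempts.
wait_for_primary() {
  local host="$1" port="$2" tries="${3:-30}" i
  for (( i = 1; i <= tries; i++ )); do
    if [ "$(mongosh --quiet --host "$host" --port "$port" \
          --eval 'db.hello().isWritablePrimary' 2>/dev/null)" = "true" ]; then
      echo "primary ready after $i attempt(s)"
      return 0
    fi
    sleep 1
  done
  echo "no primary after $tries attempts"
  return 1
}
```

Usage: wait_for_primary "$shard1_master" "$shard1_port" || exit 1 in place of the fixed sleep.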

- All nodes: enable password authentication

#.Enable password authentication

sed -i 's/#auth/auth/g' ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

sed -i 's/#keyFile/keyFile/g' ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

sed -i 's/#replSet/replSet/g' ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

cat ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf | egrep "(auth|keyFile|replSet)"

#.Restart the service for the change to take effect (clean shutdown rather than kill -9)

sudo -u mongodb /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf --shutdown

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard1_port}/mongod_${shard1_port}.conf

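- Any one node: confirm that password authentication is enabled

sleep 10;

#.connect to a data-bearing member; the arbiter (shard1_slave3) stores no users, so a password login to it fails

echo "rs.isMaster();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${shard1_slave2} --port ${shard1_port} admin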

2.2. Set Up the Second Shard Replica Set

- All nodes: start the mongod service

#.Load the parameters from the config file

source /opt/.mongo.config

#.Generate the configuration file

mkdir -p ${workdir}/mongo_shard_${shard2_port}/{data,log,pid}

curl -sL http://iso.sqlfans.cn/mongodb/config/keyfile_2021_tmp.txt -o ${workdir}/mongo_shard_${shard2_port}/data/keyfile

chmod 600 ${workdir}/mongo_shard_${shard2_port}/data/keyfile

cat /tmp/mongo_config_tmp.txt > ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

sed -i 's/#replSet/replSet/g' ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

sed -i "s/27017/${shard2_port}/" ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

sed -i "s:my_mongo_data:${workdir}:g" ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

sed -i "s/mongo_${shard2_port}/mongo_shard_${shard2_port}/" ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

chown -R mongodb.mongodb ${workdir}/mongo_shard_${shard2_port}

#.Add the shard-specific parameter

sed -i '/shardsvr/d' ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

echo "shardsvr=true" >> ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

cat ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf | egrep "(port|bind_ip|path|auth|replSet|keyFile|shardsvr)"

#.Start the service

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

#.Login test

# echo "db.version();" | mongosh --host 127.0.0.1 --port ${shard2_port}

#.Add to boot-time startup

cat /etc/rc.local | grep ${shard2_port} || echo "sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf" >> /etc/rc.local

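- Any one node: create the replica set initialization script (give the primary node the highest priority, 20 in this example, so that it does not end up as a Secondary after initialization)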

cat > /tmp/mongo_shard2_init.txt <<EOF

use admin;

config = {_id: 'set${shard2_port}', members: [

{_id: 0, host: '${shard2_master}:${shard2_port}', priority: 20},

{_id: 1, host: '${shard2_slave2}:${shard2_port}', priority: 15},

{_id: 2, host: '${shard2_slave3}:${shard2_port}', priority: 0, arbiterOnly: true}]

};

rs.initiate(config);

rs.status();

exit;

EOF

- Any one node: initialize the replica set, then run the account-creation script

#.Initialize the replica set

mongosh --host ${shard2_master} --port ${shard2_port} admin < /tmp/mongo_shard2_init.txt

#.Wait for initialization to finish (about 5 seconds for an empty cluster)

sleep 10;

#.Run the account-creation script

mongosh --host ${shard2_master} --port ${shard2_port} admin < /tmp/mongo_add_login.txt

echo "show users;" | mongosh --host ${shard2_master} --port ${shard2_port} admin

- All nodes: enable password authentication

#.Enable password authentication

sed -i 's/#auth/auth/g' ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

sed -i 's/#keyFile/keyFile/g' ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

sed -i 's/#replSet/replSet/g' ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

cat ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf | egrep "(auth|keyFile|replSet)"

#.Restart the service for the change to take effect (clean shutdown rather than kill -9)

sudo -u mongodb /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf --shutdown

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_shard_${shard2_port}/mongod_${shard2_port}.conf

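- Any one node: confirm that password authentication is enabled

sleep 10;

#.connect to a data-bearing member; the arbiter (shard2_slave3) stores no users, so a password login to it fails

echo "rs.isMaster();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${shard2_slave2} --port ${shard2_port} admin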

2.3. Set Up the Config Server Replica Set

- All nodes: start the mongod service

#.Load the parameters from the config file

source /opt/.mongo.config

#.Generate the configuration file

mkdir -p ${workdir}/mongo_config_${config_port}/{data,log,pid}

curl -sL http://iso.sqlfans.cn/mongodb/config/keyfile_2021_tmp.txt -o ${workdir}/mongo_config_${config_port}/data/keyfile

chmod 600 ${workdir}/mongo_config_${config_port}/data/keyfile

cat /tmp/mongo_config_tmp.txt > ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

sed -i 's/#replSet/replSet/g' ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

sed -i "s/27017/${config_port}/" ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

sed -i "s:my_mongo_data:${workdir}:g" ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

sed -i "s/mongo_${config_port}/mongo_config_${config_port}/" ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

chown -R mongodb.mongodb ${workdir}/mongo_config_${config_port}

#.Add the config-server-specific parameter

sed -i '/configsvr/d' ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

echo "configsvr=true" >> ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

cat ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf | egrep "(port|bind_ip|path|auth|replSet|keyFile|configsvr)"

#.Start the service

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

#.Login test

# echo "db.version();" | mongosh --host 127.0.0.1 --port ${config_port}

#.Add to boot-time startup

cat /etc/rc.local | grep ${config_port} || echo "sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf" >> /etc/rc.local

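- Any one node: create the replica set initialization script (give the primary node the highest priority, 20 in this example, so that it does not end up as a Secondary after initialization)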

cat > /tmp/mongo_config_init.txt <<EOF

use admin;

config = {_id: 'set${config_port}', configsvr: true, members: [

{_id: 0, host: '${config_master}:${config_port}', priority: 20},

{_id: 1, host: '${config_slave2}:${config_port}', priority: 15},

{_id: 2, host: '${config_slave3}:${config_port}', priority: 10}]

};

rs.initiate(config);

rs.status();

exit;

EOF

- Any one node: initialize the replica set, then run the account-creation script

#.Initialize the replica set

mongosh --host ${config_master} --port ${config_port} admin < /tmp/mongo_config_init.txt

#.Wait for initialization to finish (about 5 seconds for an empty cluster)

sleep 10;

#.Run the account-creation script

mongosh --host ${config_master} --port ${config_port} admin < /tmp/mongo_add_login.txt

echo "show users;" | mongosh --host ${config_master} --port ${config_port} admin

- All nodes: enable password authentication

#.Enable password authentication

sed -i 's/#auth/auth/g' ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

sed -i 's/#keyFile/keyFile/g' ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

sed -i 's/#replSet/replSet/g' ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

cat ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf | egrep "(auth|keyFile|replSet)"

#.Restart the service for the change to take effect (clean shutdown rather than kill -9)

sudo -u mongodb /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf --shutdown

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f ${workdir}/mongo_config_${config_port}/mongod_${config_port}.conf

- Any one node: confirm that password authentication is enabled

sleep 10;

echo "rs.isMaster();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${config_slave3} --port ${config_port} admin

2.4. Set Up the First mongos Router

- First mongos router node: start the mongos service

#.Load the parameters from the config file

source /opt/.mongo.config

#.Prepare the directories and keyfile

mkdir -p ${workdir}/mongo_router_${mongos_port}/{data,log,pid}

curl -sL http://iso.sqlfans.cn/mongodb/config/keyfile_2021_tmp.txt -o ${workdir}/mongo_router_${mongos_port}/data/keyfile

chmod 600 ${workdir}/mongo_router_${mongos_port}/data/keyfile

#.Write the mongos-specific configuration

cat > ${workdir}/mongo_router_${mongos_port}/mongod_${mongos_port}.conf <<EOF

quiet=false

timeStampFormat=iso8601-local

logappend=true

fork=true

port=${mongos_port}

bind_ip=0.0.0.0

slowms=500

maxConns=8000

keyFile=${workdir}/mongo_router_${mongos_port}/data/keyfile

pidfilepath=${workdir}/mongo_router_${mongos_port}/pid/mongod.pid

logpath=${workdir}/mongo_router_${mongos_port}/log/mongod.log

configdb=set${config_port}/${config_master}:${config_port},${config_slave2}:${config_port},${config_slave3}:${config_port}

EOF

#.Start the service with mongos (not mongod!)

chown -R mongodb.mongodb ${workdir}/mongo_router_${mongos_port}

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongos -f ${workdir}/mongo_router_${mongos_port}/mongod_${mongos_port}.conf

#.Add to boot-time startup

cat /etc/rc.local | grep ${mongos_port} || echo "sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongos -f ${workdir}/mongo_router_${mongos_port}/mongod_${mongos_port}.conf" >> /etc/rc.local

2.5. Add Shards Through the Router

- On a mongos node: create the shard initialization script; the example adds 2 shards, one for each shard replica set

cat > /tmp/mongo_mongos_init.txt <<EOF

use admin;

db.adminCommand({"setDefaultRWConcern":1,"defaultWriteConcern":{"w":1}});

sh.addShard("set${shard1_port}/${shard1_master}:${shard1_port},${shard1_slave2}:${shard1_port},${shard1_slave3}:${shard1_port}");

sh.addShard("set${shard2_port}/${shard2_master}:${shard2_port},${shard2_slave2}:${shard2_port},${shard2_slave3}:${shard2_port}");

sh.status();

exit;

EOF

- On a mongos node: run the shard initialization

#.Run the shard initialization

mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${mongos1_master} --port ${mongos_port} admin < /tmp/mongo_mongos_init.txt

- On a mongos node: confirm the shards. Partial sh.status() output:

[direct: mongos] admin> sh.status();

shards

[

{

_id: 'set3701',

host: 'set3701/192.168.31.101:3701,192.168.31.102:3701',

state: 1,

topologyTime: Timestamp({ t: 1726828436, i: 4 })

},

{

_id: 'set3702',

host: 'set3702/192.168.31.101:3702,192.168.31.102:3702',

state: 1,

topologyTime: Timestamp({ t: 1726828440, i: 3 })

}

]

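- On a mongos node: switch to the admin database, enable sharding on the database, shard each collection according to the chosen strategy, and finally write data

Note: if a collection already contains data, you must create an index that supports the shard key before sharding it. If the collection is empty, MongoDB creates the index as part of sh.shardCollection().

#.Log in to a mongos node (this does not work when connected to a shard node)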

mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${mongos1_master} --port ${mongos_port} admin

#.Hashed sharding strategy: the example shards on a hash of name

use admin;

sh.enableSharding("db1")

sh.shardCollection("db1.aaa",{"name":"hashed"})

#.Ranged sharding strategy: the example shards on ranges of age

use admin;

sh.enableSharding("db1")

sh.shardCollection("db1.bbb",{"age":1})

#.View the sharding status of the whole cluster

use admin;

db.printShardingStatus();

#.Show the sharding information of a collection

use db1;

db.aaa.stats();

db.bbb.stats();

#.Insert 1000 documents into each collection (insertOne replaces the deprecated insert)

use db1;

for(var i=1;i<=1000;i++){db.aaa.insertOne({"name":"user"+i,"created_at":new Date()});};

for(var i=1;i<=1000;i++){db.bbb.insertOne({"name":"user"+i,"age":NumberInt(i%120)})}

- On the shard primaries: log in to each of the 2 shards and check the document counts

#.shard1 primary: aaa should have 518 documents and bbb 1000

echo "db.aaa.countDocuments();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${shard1_master} --port ${shard1_port} db1

echo "db.bbb.countDocuments();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${shard1_master} --port ${shard1_port} db1

#.shard2 primary: aaa should have 482 documents and bbb 0

echo "db.aaa.countDocuments();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${shard2_master} --port ${shard2_port} db1

echo "db.bbb.countDocuments();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${shard2_master} --port ${shard2_port} db1

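- If all the data ends up on a single shard, the possible reasons are listed below (in this example, db1.bbb is range-sharded on age, so all of its data sits on shard1 because of reason 2, while db1.aaa is hash-sharded on name and is therefore spread across both shards):

Reason 1: the system is busy and balancing is still in progress

Reason 2: the data is too small to fill a chunk. The default chunk size is 128 MB as of MongoDB 6.0 (64 MB in earlier versions) and can be changed; range-sharded data is only moved to other shards once the collection grows well beyond that size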

2.6. Set Up the Second mongos Router

- Second mongos router node: start the mongos service

#.Load the parameters from the config file

source /opt/.mongo.config

#.Prepare the directories and keyfile

mkdir -p ${workdir}/mongo_router_${mongos_port}/{data,log,pid}

curl -sL http://iso.sqlfans.cn/mongodb/config/keyfile_2021_tmp.txt -o ${workdir}/mongo_router_${mongos_port}/data/keyfile

chmod 600 ${workdir}/mongo_router_${mongos_port}/data/keyfile

#.Write the mongos-specific configuration

cat > ${workdir}/mongo_router_${mongos_port}/mongod_${mongos_port}.conf <<EOF

quiet=false

timeStampFormat=iso8601-local

logappend=true

fork=true

port=${mongos_port}

bind_ip=0.0.0.0

slowms=500

maxConns=8000

keyFile=${workdir}/mongo_router_${mongos_port}/data/keyfile

pidfilepath=${workdir}/mongo_router_${mongos_port}/pid/mongod.pid

logpath=${workdir}/mongo_router_${mongos_port}/log/mongod.log

configdb=set${config_port}/${config_master}:${config_port},${config_slave2}:${config_port},${config_slave3}:${config_port}

EOF

#.Start the service with mongos (not mongod!)

chown -R mongodb.mongodb ${workdir}/mongo_router_${mongos_port}

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongos -f ${workdir}/mongo_router_${mongos_port}/mongod_${mongos_port}.conf

#.Add to boot-time startup

cat /etc/rc.local | grep ${mongos_port} || echo "sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongos -f ${workdir}/mongo_router_${mongos_port}/mongod_${mongos_port}.conf" >> /etc/rc.local

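- Client login to the second mongos: the second router needs no additional configuration, because the shard configuration is stored on the config servers

#.Log in to a mongos node (this does not work when connected to a shard node)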

echo "sh.status();" | mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${mongos2_master} --port ${mongos_port} admin

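- Appendix: partial sh.status() output (in this captured run, db1.aaa was sharded on a hashed username key rather than name)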

[direct: mongos] admin> sh.status();

{

database: {

_id: 'db1',

primary: 'set3701',

partitioned: false,

version: {

uuid: new UUID("5701a088-f8f0-4444-bc79-b2cc315c24a8"),

timestamp: Timestamp({ t: 1726828578, i: 1 }),

lastMod: 1

}

},

collections: {

'db1.aaa': {

shardKey: { username: 'hashed' },

unique: false,

balancing: true,

chunkMetadata: [

{ shard: 'set3701', nChunks: 2 },

{ shard: 'set3702', nChunks: 2 }

],

chunks: [

{ min: { username: MinKey() }, max: { username: Long("-4611686018427387902") }, 'on shard': 'set3701', 'last modified': Timestamp({ t: 1, i: 0 }) },

{ min: { username: Long("-4611686018427387902") }, max: { username: Long("0") }, 'on shard': 'set3701', 'last modified': Timestamp({ t: 1, i: 1 }) },

{ min: { username: Long("0") }, max: { username: Long("4611686018427387902") }, 'on shard': 'set3702', 'last modified': Timestamp({ t: 1, i: 2 }) },

{ min: { username: Long("4611686018427387902") }, max: { username: MaxKey() }, 'on shard': 'set3702', 'last modified': Timestamp({ t: 1, i: 3 }) }

],

tags: []

},

'db1.bbb': {

shardKey: { age: 1 },

unique: false,

balancing: true,

chunkMetadata: [ { shard: 'set3701', nChunks: 1 } ],

chunks: [

{ min: { age: MinKey() }, max: { age: MaxKey() }, 'on shard': 'set3701', 'last modified': Timestamp({ t: 1, i: 0 }) }

],

tags: []

}

}

}

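2.7. Disaster Drill

- Remove a shard (e.g. set3702). After removal, the data on the removed shard is migrated automatically to the remaining shards

#.Log in to mongos as a client. The first removeShard call starts draining; repeat it until the result shows state: 'completed' (in testing, two calls were needed)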

use admin;

db.runCommand({removeShard: "set3702"});

db.runCommand({removeShard: "set3702"});
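removeShard is asynchronous: the first call starts draining and returns state 'started', later calls report 'ongoing' while chunks move, and a call made after draining finishes returns 'completed', which is consistent with the two calls needed in testing. If the shard is the primary shard of any database, the response lists that database under dbsToMove, and draining does not complete until movePrimary moves it. A sketch of a polling loop, assuming bash and the variables from /opt/.mongo.config; remove_shard is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: repeat removeShard through mongos until it reports
# "completed", waiting 10 seconds between attempts.
# Uses mongo_user, mongo_pass, mongos1_master and mongos_port from /opt/.mongo.config.
remove_shard() {
  local shard="$1" tries="${2:-60}" state i
  for (( i = 1; i <= tries; i++ )); do
    state=$(mongosh --quiet -u "$mongo_user" -p "$mongo_pass" \
      --authenticationDatabase admin --host "$mongos1_master" --port "$mongos_port" \
      --eval "db.adminCommand({removeShard: '$shard'}).state" admin 2>/dev/null)
    echo "attempt $i: ${state:-error}"
    [ "$state" = "completed" ] && return 0
    sleep 10
  done
  return 1
}
```

Usage: source /opt/.mongo.config, then remove_shard set3702.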

- Add an empty shard (e.g. set3702)

#.Log in to mongos as a client

use admin;

sh.addShard("set3702/192.168.31.101:3702,192.168.31.102:3702,192.168.31.103:3702");

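- After adding a shard, it is recommended to run balancing during off-peak business hours

Appendix

Problems Encountered

- Problem 1: on a mongos node, adding shard1 fails with the error below, because this 3-member replica set has one arbiter: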

[root@localhost ~]# mongosh -u ${mongo_user} -p ${mongo_pass} --authenticationDatabase admin --host ${mongos1_master} --port ${mongos_port} admin

[direct: mongos] admin> use admin;

already on db admin

[direct: mongos] admin> sh.addShard("set3701/192.168.31.101:3701,192.168.31.102:3701,192.168.31.103:3701");

MongoServerError: Cannot add set3701/192.168.31.101:3701,192.168.31.102:3701,192.168.31.103:3701 as a shard since the implicit default write concern on this shard is set to {w : 1},

because number of arbiters in the shard's configuration caused the number of writable voting members not to be strictly more than the voting majority.

Change the shard configuration or set the cluster-wide write concern using the setDefaultRWConcern command and try again.

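- Solution 1: if a replica set has two or fewer data-bearing members, set a cluster-wide default write concern with setDefaultRWConcern before adding the shard (the mongos initialization script in 2.5 already does this), for example: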

use admin;

db.adminCommand({"setDefaultRWConcern":1,"defaultWriteConcern":{"w":1}});

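- Problem 2: after all nodes restarted, the boot-time startup configured during deployment (entries in the order they were added) failed. Example: /etc/rc.local on node 1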

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f /data/mongo_shard_3701/mongod_3701.conf

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f /data/mongo_shard_3702/mongod_3702.conf

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f /data/mongo_config_3718/mongod_3718.conf

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongos -f /data/mongo_router_3717/mongod_3717.conf

- Solution 2: after several attempts, the following startup order works (note: the config server process must start first)

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f /data/mongo_config_3718/mongod_3718.conf

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongos -f /data/mongo_router_3717/mongod_3717.conf

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f /data/mongo_shard_3701/mongod_3701.conf

sudo -u mongodb numactl --interleave=all /usr/local/mongodb6/bin/mongod -f /data/mongo_shard_3702/mongod_3702.conf
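Since the config server must start first, a quick check of /etc/rc.local on each node can catch an entry that was appended in the wrong order. A minimal sketch; check_boot_order is a hypothetical helper that verifies only the rule stated above (the config server line comes before any mongos or shard line) and recognizes entries by the directory names used in this guide.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the config server entry in rc.local
# precedes every mongos (mongo_router_*) and shard (mongo_shard_*) entry.
check_boot_order() {
  local rc="${1:-/etc/rc.local}" cfg first_other
  # line number of the first config server entry
  cfg=$(grep -n 'mongo_config_' "$rc" | head -n 1 | cut -d: -f1)
  # line number of the first router or shard entry
  first_other=$(grep -nE 'mongo_(router|shard)_' "$rc" | head -n 1 | cut -d: -f1)
  if [ -z "$cfg" ]; then
    echo "no config server entry"; return 1
  fi
  if [ -n "$first_other" ] && (( first_other < cfg )); then
    echo "config server starts after line $first_other"; return 1
  fi
  echo "ok"
}
```

On node 1, the original order from Problem 2 fails this check, while the order from Solution 2 passes.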

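How to Configure the Connection String

- mongos acts as the query router and provides the interface between client applications and the sharded cluster, so application code should connect to the mongos IP addresses and ports, never to the individual shard replica sets

- In this example, mongos runs on both node1:3717 and node2:3717. Either one works; listing both lets the driver fall back to the other router if one is unavailable. Do not add a replicaSet parameter: the mongos routers are not a replica set

mongodb://user:******@192.168.31.101:3717,192.168.31.102:3717/db1?authSource=admin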
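Application configuration often assembles this string from a host list. A minimal sketch; mongos_uri is a hypothetical helper that joins every mongos address into one seed list and deliberately adds no replicaSet option, because the routers are not members of a replica set. If a password contains reserved characters such as @, : or /, it must be percent-encoded first, which this sketch does not do.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a mongos connection string.
# Arguments: user password database host:port [host:port ...]
mongos_uri() {
  local user="$1" pass="$2" db="$3"; shift 3
  local hosts
  hosts=$(IFS=,; echo "$*")    # join the remaining arguments with commas
  echo "mongodb://${user}:${pass}@${hosts}/${db}?authSource=admin"
}
```

Usage: mongos_uri rwuser "$password" db1 192.168.31.101:3717 192.168.31.102:3717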